LO Extraction

Activating new datasource in LBWE

- Go to LBWE and look for the datasource.

- The datasource can be in one of the following states

- Click on 'Inactive' to make the datasource active. The system may ask for a customizing transport and then shows a pop-up with an MCEX notification; continue, and the datasource is activated.

- The step above activates the datasource only in the development client. If development and testing are done in different clients, use transaction SCC1 to copy the changes between the clients.

Enhancing existing datasources

Adding field in LBWE

Check whether the field you need is already present in the datasource's extract structure. Go to LBWE and open 'Maintenance'. If the field is listed there, you can add it directly by moving it from the right side to the left.

Adding field as an enhancement

If the field is not present in the extract structure, you need to add it to the append structure of the datasource.

There is also a way to add the field by enhancing the MC structure, which I have never tried.

Adding fields in LBWE

Now that the field is available in LBWE, all we need to do is move it from the right side to the left side, using the steps below.

Step 1

Check and clear the setup tables (LBWG).

Step 2

Clear the extraction queue in LBWQ.

If there are entries in LBWQ, run the V3 job; this should move the entries to the delta queue (RSA7).

You can also delete the queues in LBWQ, but the deleted entries are lost and will never reach BW.

Step 3

Go to LBWE and click on 'Maintenance'.

Once the field appears in the field pool on the right, select it and move it to the left side; it is then ready to use.

A couple of information pop-ups will appear; read them (if you want) and click OK. Once the field is added, the datasource turns red and becomes inactive.

Now click on the datasource. This activates the datasource and takes you to its maintenance screen. The new fields are hidden by default, so remove the checks for 'Hide Fields' and 'field available only in customer exit'.

This step changes the datasource status from red to yellow.

Click on Job Control ('Inactive') to make it active.

The field is now added to the datasource, and everything is active.

Note: If you have separate development and development-testing clients, you need to copy the customizing changes to the test client. (This is not a separate test system; it applies only if you have a test client.)

Run transaction SCC1 in the target client for the customizing transport. Also check 'Including Request Subtasks'.

Since customizing changes are client-specific, they need to be copied to the other clients this way.

Possible Errors

If you get the error below, it means that LBWQ has not been cleared.

"Entries for application 13 still exist in the extraction structure"

Solution:

Run the V3 job and clear the entries in LBWQ.

If you get the error below, the setup tables still contain data.

"Struct appl 13 cannot be changed due to setup table"

Solution:

Delete the setup tables in LBWG.
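Both errors above come from the same precondition: LBWE will not change an extract structure while the application still has entries in LBWQ or data in the setup tables. The Python sketch below models only that rule as described in these notes; it does not talk to SAP, and `AppState` and `structure_change_blockers` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AppState:
    """Hypothetical snapshot of one LO application (e.g. application 13)."""
    application: str
    lbwq_entries: int  # entries still waiting in the extraction queue (LBWQ)
    setup_rows: int    # rows still present in the setup tables


def structure_change_blockers(state: AppState) -> List[str]:
    """Return each error LBWE would raise, paired with the fix from these notes."""
    blockers = []
    if state.lbwq_entries > 0:
        blockers.append(
            f"Entries for application {state.application} still exist in the "
            "extraction structure: run the V3 job and clear LBWQ"
        )
    if state.setup_rows > 0:
        blockers.append(
            f"Struct appl {state.application} cannot be changed due to setup "
            "table: delete the setup tables in LBWG"
        )
    return blockers  # empty list: the field can be moved in LBWE
```

For example, `structure_change_blockers(AppState("13", 0, 0))` returns an empty list, while a state with queued entries and setup data returns both messages, in the same order as the clean-up steps.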

Running Setup Tables

When you run the transaction for the setup tables, you might get the error below.

"DataSource 2LIS_02_*** contains data still to be transferred"

This means that not all data from the ECC queues has been transferred to BW yet.

So first make sure that LBWQ is empty for the extractor. In most cases the V3 job runs regularly, every 15 or 30 minutes, so these queues get cleared. Next, clear the delta queue in RSA7 by pulling the data into BW. If there is activity in the source system, you might need to run the infopackages several times.

Once both LBWQ and RSA7 are empty, you can run the transaction and it will go through fine.
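The drain-then-setup sequence above can be modeled as a loop. In this hypothetical Python sketch the queues are plain counters, each round stands for one V3 job run followed by one infopackage run, and `new_postings` (an assumed input) represents documents posted in the source system between rounds, which is why more than one round may be needed.

```python
from typing import List, Tuple


def drain_queues(lbwq: int, rsa7: int, new_postings: List[int],
                 max_rounds: int = 10) -> Tuple[int, int]:
    """Return (rounds needed, entries transferred to BW) to empty LBWQ and RSA7.

    lbwq:         entries waiting in the extraction queue (LBWQ)
    rsa7:         entries waiting in the delta queue (RSA7)
    new_postings: entries posted in the source system after each round
    """
    transferred = 0
    for round_no in range(1, max_rounds + 1):
        # V3 job: move everything waiting in LBWQ to the delta queue (RSA7).
        rsa7 += lbwq
        lbwq = 0
        # Infopackage: pull the whole delta queue into BW.
        transferred += rsa7
        rsa7 = 0
        # Documents posted meanwhile land in LBWQ again.
        if round_no <= len(new_postings):
            lbwq += new_postings[round_no - 1]
        if lbwq == 0 and rsa7 == 0:
            return round_no, transferred  # safe to run the setup tables now
    raise RuntimeError("queues still not empty; retry when source activity is lower")
```

With `drain_queues(40, 10, [3, 1, 0])` the result is `(3, 54)`: three rounds, because postings keep arriving after the first two.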

Adding fields in structure

Step 1

Go to RSA6 -> click on the datasource and go to its MC* structure.

If you want to create a new append structure, click on 'Append Structure' -> 'New'.

Otherwise, go to any of the existing append structures and add your fields there.

Step 2

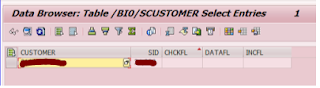

Open the datasource again in RSA6; the new fields are available but marked as hidden. Click Edit and unhide the fields.

Step 3

Next, the code that fills these fields has to be added in the CMOD customer exit or in a BAdI implementation, whichever your company uses.
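The exit code itself is ABAP (for transaction data, typically in CMOD enhancement RSAP0001); the Python sketch below only models the usual fill pattern so the logic is easy to follow. The datasource name check, the field names `VBELN` and `ZZ_REGION`, and the lookup dictionary are illustrative assumptions, not part of any standard datasource.

```python
from typing import Dict, List

# Hypothetical target; the exit is called for every datasource, so filter first.
TARGET_DATASOURCE = "2LIS_11_VAHDR"


def fill_appended_fields(datasource: str, records: List[dict],
                         region_by_doc: Dict[str, str]) -> List[dict]:
    """Fill the (hypothetical) appended field ZZ_REGION for one data package.

    region_by_doc stands in for lookup data read once per package
    (in ABAP, e.g. one SELECT ... FOR ALL ENTRIES) rather than once per record.
    """
    # Leave all other datasources untouched.
    if datasource != TARGET_DATASOURCE:
        return records
    for rec in records:
        # No lookup hit: keep the field blank instead of failing the extraction.
        rec["ZZ_REGION"] = region_by_doc.get(rec["VBELN"], "")
    return records
```

Filtering on the datasource first matters because one exit serves every datasource; without the check, the code would try to fill a field that other extract structures do not have.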