Dynamic table fields declaration with Reference

For the parameters we use a dynamic reference to the data dictionary. This way we do not have to define texts in the text pool; the field label from the dictionary is used instead. Character fields hold the dictionary names:

data:

* Logon data

t_gltgv(30) value 'BAPILOGOND-GLTGV',

t_gltgb(30) value 'BAPILOGOND-GLTGB',

t_ustyp(30) value 'BAPILOGOND-USTYP',

parameters:

gltgv like (t_gltgv),

gltgb like (t_gltgb),

ustyp like (t_ustyp),

class like (t_class),

accnt like (t_accnt),

Reference : IAM_USERCHANGE
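
The fragment above is only an excerpt. As a minimal, self-contained sketch of the same technique, the report below declares one character field with a dictionary field name and one parameter that refers to it dynamically. The report name z_dyn_param_demo is hypothetical; the field BAPILOGOND-GLTGV is taken from the excerpt.

```abap
REPORT z_dyn_param_demo. " hypothetical report name

* Character field holding the dictionary field name (upper case).
DATA t_gltgv(30) VALUE 'BAPILOGOND-GLTGV'.

* Dynamic dictionary reference: the field label and screen attributes
* are taken from the dictionary field named in t_gltgv when the
* selection screen is displayed.
PARAMETERS gltgv LIKE (t_gltgv).

START-OF-SELECTION.
* Echo the entered value; the parameter itself is a plain character field.
  WRITE: / 'GLTGV:', gltgv.
```

As long as no selection text is maintained for the parameter in the text pool, the selection screen should show the dictionary field label for GLTGV.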

-------------------------------------------------------------------------------------------------------------------------

REPORT z_dynamic_read.

DATA:

gt_itab TYPE REF TO data,

ge_key_field1 TYPE char30,

ge_key_field2 TYPE char30,

ge_key_field3 TYPE char30,

go_descr_ref TYPE REF TO cl_abap_tabledescr.

FIELD-SYMBOLS:

<gt_itab> TYPE STANDARD TABLE,

<gs_key_comp> TYPE abap_keydescr,

<gs_result> TYPE ANY.

PARAMETERS:

pa_table TYPE tabname16 DEFAULT 'SPFLI',

pa_cond1 TYPE string DEFAULT sy-mandt,

pa_cond2 TYPE string DEFAULT 'LH',

pa_cond3 TYPE string DEFAULT '0123'.

START-OF-SELECTION.

* Create and populate internal table

CREATE DATA gt_itab TYPE STANDARD TABLE OF (pa_table).

ASSIGN gt_itab->* TO <gt_itab>.

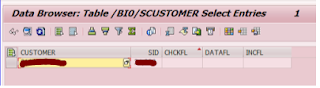

SELECT * FROM (pa_table) INTO TABLE <gt_itab>.

* Get the key components into the fields ge_key_field1, ...

go_descr_ref ?= cl_abap_typedescr=>describe_by_data_ref( gt_itab ).

LOOP AT go_descr_ref->key ASSIGNING <gs_key_comp>.

CASE sy-tabix.

WHEN 1.

ge_key_field1 = <gs_key_comp>-name.

WHEN 2.

ge_key_field2 = <gs_key_comp>-name.

WHEN 3.

ge_key_field3 = <gs_key_comp>-name.

ENDCASE.

ENDLOOP.

* Finally, perform the search

READ TABLE <gt_itab> ASSIGNING <gs_result>

WITH KEY (ge_key_field1) = pa_cond1

(ge_key_field2) = pa_cond2

(ge_key_field3) = pa_cond3.

IF sy-subrc = 0.

WRITE / 'Record found.'.

ELSE.

WRITE / 'No record found.'.

ENDIF.

One note: when an internal table is created dynamically, like in my program above, the table key is not the key defined in the DDIC structure. Instead, the default key for the standard table is used (i.e. all non-numeric fields).
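
If the key fields defined in the DDIC are needed rather than the default key, one possible approach is to describe the dictionary structure by name and filter its field list on the key flag. The following is a sketch under assumptions, not part of the original program: the report name z_ddic_key_fields and the variables go_struct, gt_fields and gt_keys are hypothetical, and the classic exceptions of the RTTI calls are not handled.

```abap
REPORT z_ddic_key_fields. " hypothetical report name

PARAMETERS pa_table TYPE tabname16 DEFAULT 'SPFLI'.

DATA: go_struct TYPE REF TO cl_abap_structdescr,
      gt_fields TYPE ddfields,
      gt_keys   TYPE STANDARD TABLE OF fieldname WITH DEFAULT KEY.

FIELD-SYMBOLS <gs_field> TYPE dfies.

START-OF-SELECTION.
* Describe the dictionary structure by name, not the dynamic table.
  go_struct ?= cl_abap_typedescr=>describe_by_name( pa_table ).

* Field list with dictionary attributes, including the key flag.
  gt_fields = go_struct->get_ddic_field_list( ).

* Collect and list only the fields flagged as key fields in the DDIC.
  LOOP AT gt_fields ASSIGNING <gs_field> WHERE keyflag = 'X'.
    APPEND <gs_field>-fieldname TO gt_keys.
    WRITE / <gs_field>-fieldname.
  ENDLOOP.
```

The names collected in gt_keys could then be used in the dynamic WITH KEY clause in place of the components read from the table descriptor.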